ASL Typing AI Platform

By Andrew Xavier, Alban Dietrich, Kenneth Munyuza, and Sam D. Brosh, Columbia University

Video, Paper, and Repository

Introduction

American Sign Language (ASL) serves as a vital communication bridge for the deaf community. Because ASL relies on hand gestures rather than spoken words, detecting it automatically has become a focal point in image processing and classification. Recognizing the challenges and the steep learning curve associated with ASL, our team built the ASL Detection, Correction, and Completion (ASLDCC) application. The tool runs on both computers and smartphones and aims to make ASL communication more accessible and efficient.

About ASLDCC

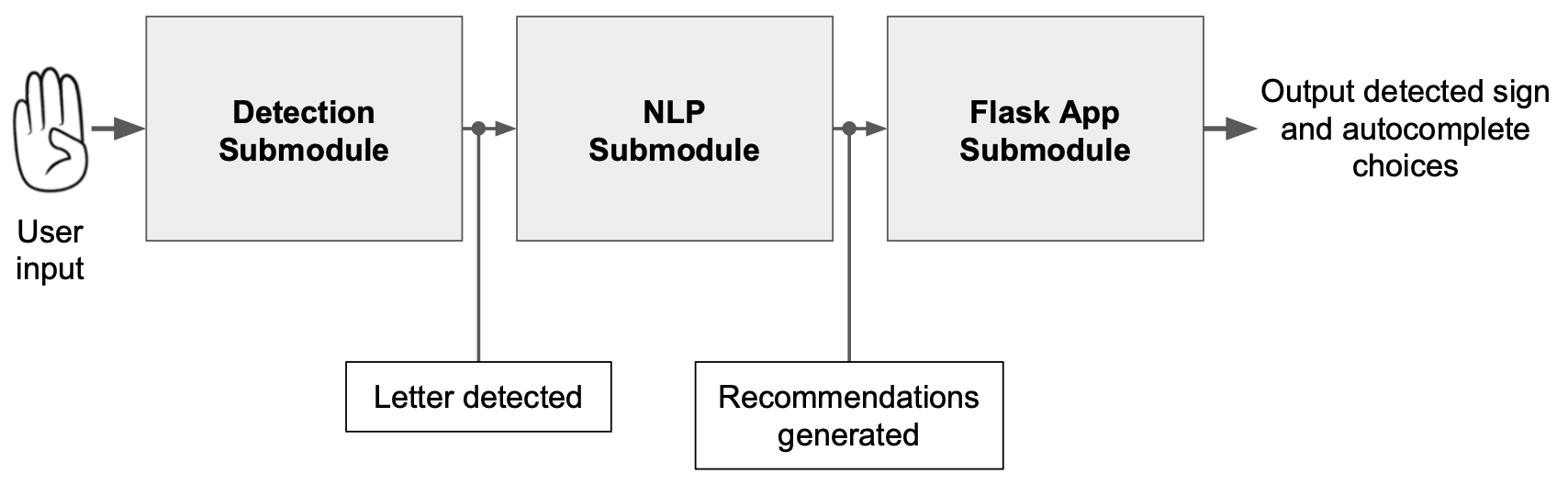

ASLDCC is equipped with three main submodules:

Detection Submodule: Recognizes the user's hand signs.

Natural Language Processing (NLP) Submodule: Suggests corrections or completions based on detected signs.

Full-Stack Submodule: Presents the detected letters and recommendations in a user-friendly interface.

The application is especially useful on devices with basic interfaces, because it removes the need for a keyboard. As Augmented Reality (AR) becomes more widespread, ASLDCC lets signers communicate without worrying about errors or prolonged signing times.

How It Works

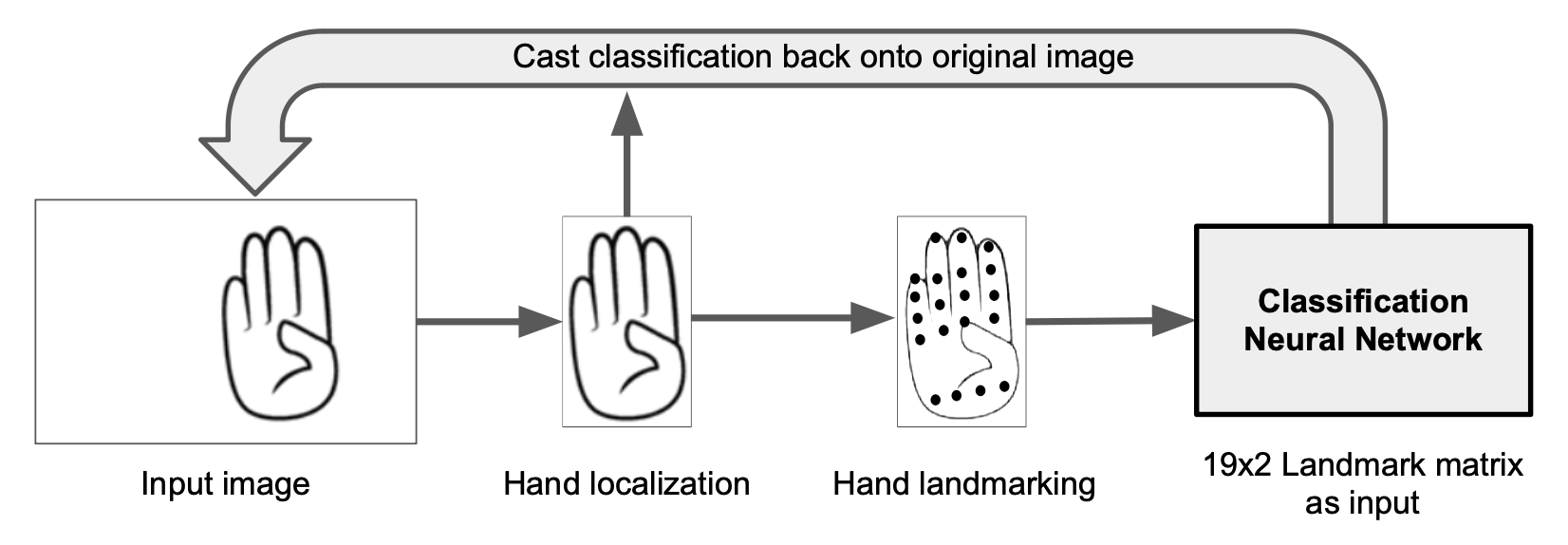

- Detection: Using MediaPipe, the application detects hand landmarks in an image (in this case, frames from the live video feed). This data was used to train a convolutional neural network (CNN) to identify the ASL signs for letters of the alphabet. During inference, the predictions are aggregated over frames to ensure that each registered sign is intentional.

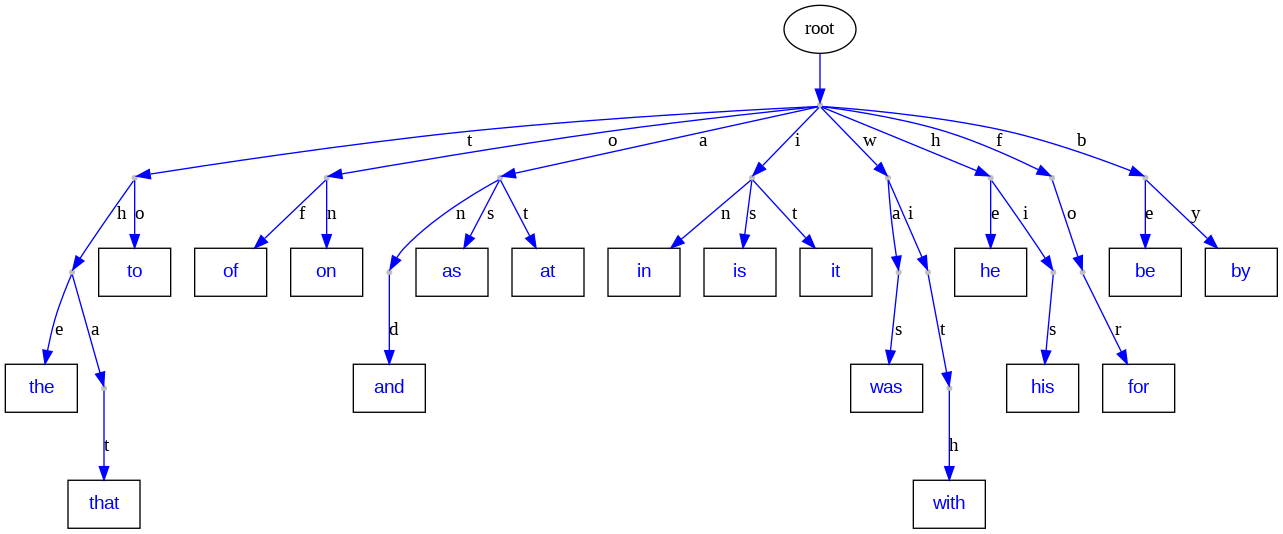
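
One way to picture the frame aggregation step is a sliding-window vote: a letter is emitted only once it dominates the most recent per-frame predictions. The sketch below is an illustration under our own assumptions, not the project's actual code. The class name `FrameVoteAggregator`, the helper `aggregate_stream`, and the `window` and `min_share` values are all hypothetical.

```python
from collections import Counter, deque
from typing import Deque, Iterable, List, Optional


class FrameVoteAggregator:
    """Emit a letter only when it dominates a sliding window of frame predictions."""

    def __init__(self, window: int = 15, min_share: float = 0.8) -> None:
        # window and min_share are illustrative defaults, not values from the project.
        self.window = window
        self.min_share = min_share
        self.frames: Deque[str] = deque(maxlen=window)

    def update(self, predicted_letter: str) -> Optional[str]:
        """Record one per-frame prediction; return a letter once it is stable."""
        self.frames.append(predicted_letter)
        if len(self.frames) < self.window:
            return None  # not enough frames observed yet
        letter, count = Counter(self.frames).most_common(1)[0]
        if count / self.window >= self.min_share:
            self.frames.clear()  # reset so the same held sign is not emitted twice in a row
            return letter
        return None


def aggregate_stream(predictions: Iterable[str], window: int = 15,
                     min_share: float = 0.8) -> List[str]:
    """Run the aggregator over a sequence of per-frame predictions."""
    aggregator = FrameVoteAggregator(window, min_share)
    emitted = []
    for prediction in predictions:
        letter = aggregator.update(prediction)
        if letter is not None:
            emitted.append(letter)
    return emitted
```

With a scheme like this, brief transitional hand shapes between two signs never reach the required share of the window, so they are dropped instead of being typed.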

- NLP Processing: Based on the detected signs, the NLP algorithm suggests possible word completions. For instance, if the letter "A" is detected, the algorithm might suggest "Able", "Apple", or "Apply". The candidate words for a given input are generated using a directed acyclic word graph (DAWG). The top three candidates are then selected by a statistical model that uses the Brown Corpus as its reference set.
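
The completion step can be sketched as a prefix lookup followed by a frequency ranking. The example below is a simplified stand-in, not the project's implementation: it uses a plain trie instead of a DAWG (a DAWG would additionally merge shared suffixes to save memory), and the word counts in `TOY_COUNTS` are made up for illustration rather than taken from the Brown Corpus. All names here are hypothetical.

```python
from typing import Dict, Iterable, List

# Made-up counts for illustration only; the project derives its statistics
# from the Brown Corpus, which is not reproduced here.
TOY_COUNTS: Dict[str, int] = {
    "able": 40, "apple": 12, "apply": 25, "and": 900,
    "each": 80, "easy": 30, "eat": 20,
}


class TrieNode:
    """One node of a plain trie (a simpler stand-in for a DAWG)."""

    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.is_word = False


def build_trie(words: Iterable[str]) -> TrieNode:
    """Insert every word into a trie and return its root."""
    root = TrieNode()
    for word in words:
        node = root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True
    return root


def words_with_prefix(root: TrieNode, prefix: str) -> List[str]:
    """Collect every stored word that starts with the given prefix."""
    node = root
    for ch in prefix:
        if ch not in node.children:
            return []
        node = node.children[ch]
    found = []
    stack = [(node, prefix)]
    while stack:
        current, text = stack.pop()
        if current.is_word:
            found.append(text)
        for ch, child in current.children.items():
            stack.append((child, text + ch))
    return found


def suggest(root: TrieNode, prefix: str, counts: Dict[str, int], k: int = 3) -> List[str]:
    """Return the k most frequent completions, ties broken alphabetically."""
    candidates = words_with_prefix(root, prefix.lower())
    return sorted(candidates, key=lambda w: (-counts.get(w, 0), w))[:k]
```

Calling `suggest(build_trie(TOY_COUNTS), "A", TOY_COUNTS)` returns at most three words beginning with "a", ordered by the toy counts. A real statistical model could use richer signals than raw frequency, such as the previous word in the message.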

- Full-Stack Integration: The entire process is integrated into a user-friendly interface, allowing users to view detected letters and select word suggestions.

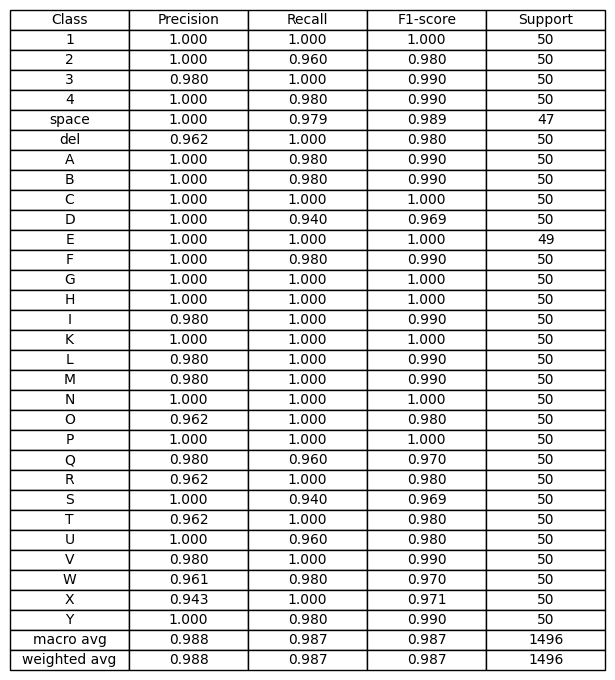

Performance & Results

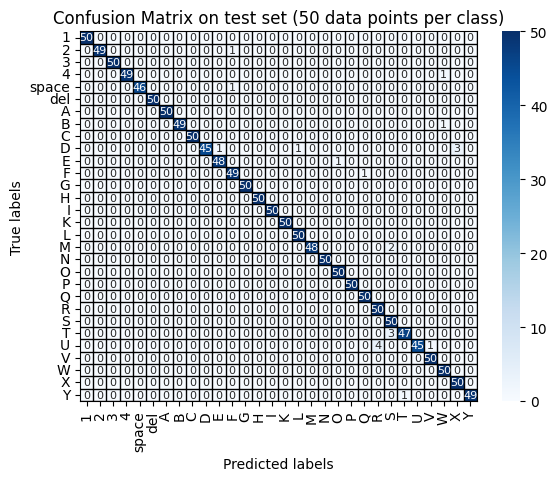

Our ASL classification model, trained on diverse datasets, achieved **98.3% accuracy** and an average F1-score of 0.985 across all classes. The application also ran in real time on both mobile and web platforms. Notably, the web application was slightly more accurate, owing to the stability of laptop setups.
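
For readers unfamiliar with these metrics, the sketch below shows how accuracy and a class-averaged F1-score can be computed from true and predicted labels. It assumes an unweighted (macro) average over classes; the text above does not specify which averaging was used, so treat that choice as our assumption. The function names are hypothetical, and the labels in the usage note are toy data, not the project's results.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """F1 = 2*TP / (2*TP + FP + FN), computed separately for each label."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of the per-class F1 scores (macro averaging, assumed here)."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)
```

For example, `macro_f1(["A", "A", "B", "B"], ["A", "B", "B", "B"])` averages the F1 of class "A" (2/3) and class "B" (4/5), while `accuracy` on the same toy labels is 3/4.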

Figures 5 and 6: Performance of the CV Detection Module

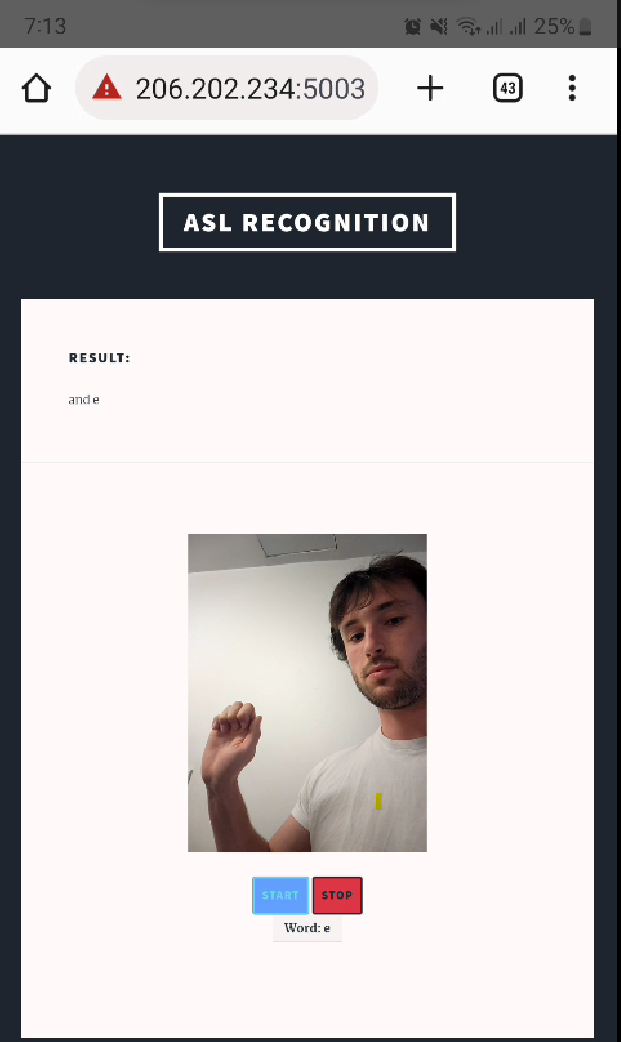

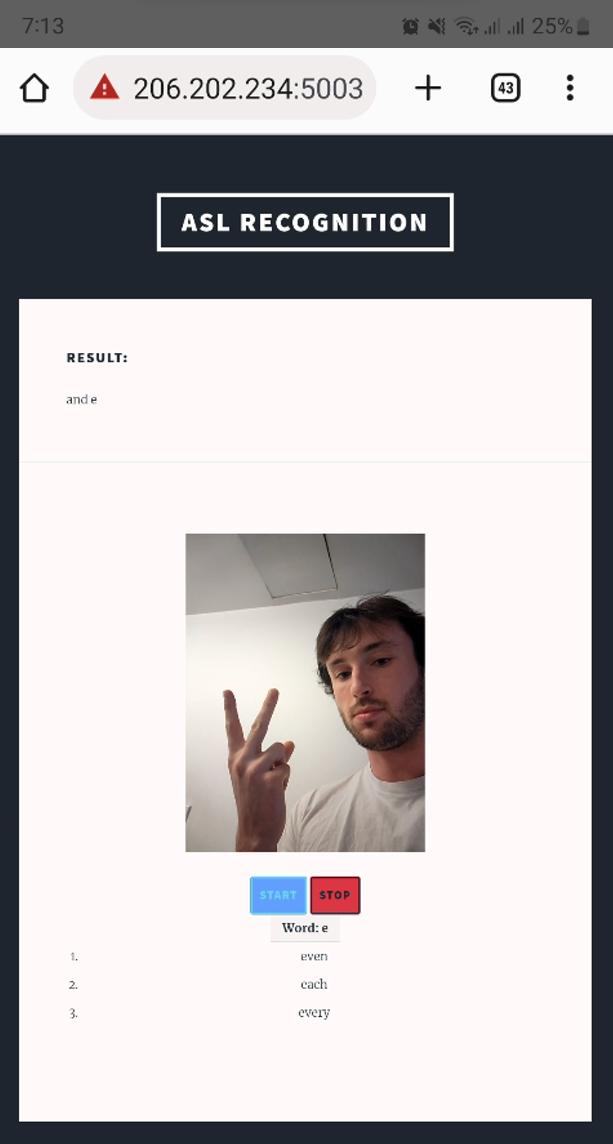

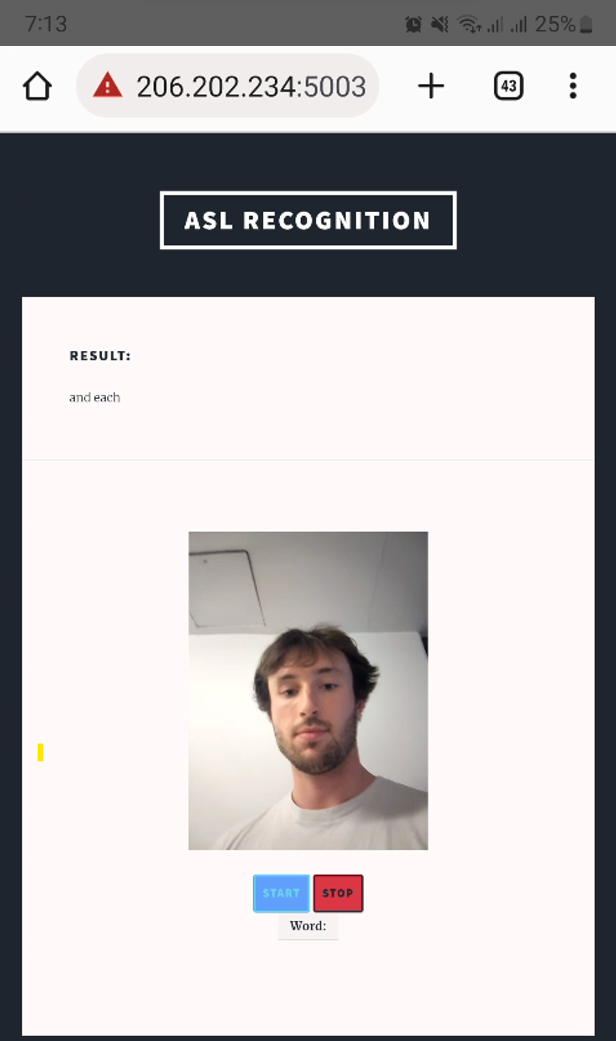

Example of Final Product on Mobile Device:

- User signs "E" and intends to type "each"

- User signs "2" to select the second auto-complete option, "each"

- "Each" is added to the current result message
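
The interaction above can be modeled as a small state machine: letter signs extend the current prefix, and digit signs pick one of the displayed suggestions. The sketch below is only our reading of that flow, not the application's code. `TypingSession`, `on_sign`, and the `suggest` callback are hypothetical names, and the suggester used in the usage note returns hard-coded words so that "each" appears second, as in the example.

```python
from typing import Callable, List


class TypingSession:
    """Track the prefix being signed and the words accepted so far."""

    def __init__(self, suggest: Callable[[str], List[str]]) -> None:
        # suggest is any function mapping a prefix to an ordered list of completions.
        self.suggest = suggest
        self.prefix = ""
        self.message: List[str] = []

    def suggestions(self) -> List[str]:
        """Completions currently shown to the user (empty until a letter is signed)."""
        return self.suggest(self.prefix) if self.prefix else []

    def on_sign(self, sign: str) -> None:
        """Handle one stable sign: digits select a suggestion, letters extend the prefix."""
        if sign.isdigit():
            options = self.suggestions()
            index = int(sign) - 1  # signing "2" selects the second option
            if 0 <= index < len(options):
                self.message.append(options[index])
                self.prefix = ""  # start a new word
        else:
            self.prefix += sign.lower()
```

As a usage example, create `session = TypingSession(lambda p: ["easy", "each", "eat"] if p == "e" else [])`, then call `session.on_sign("E")` followed by `session.on_sign("2")`. The session's `message` then holds the selected word and the prefix is reset for the next word.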

Conclusion & Future Prospects

ASLDCC is a step toward addressing the communication challenges faced by the deaf community.

Future enhancements include refining the dataset, introducing hand filter mechanisms to eliminate background distractions, and expanding the model to recognize full ASL words, which involve dynamic hand movements.